Making time-lapse videos of beautiful buildings and landscapes, from entirely generated images proved to be tricky but possible. This post tells the story of how I made my first GAN-art pieces and took the first steps towards higher level AI control of deep generative systems.

See also: Etopia exhibition Gallery Collection NFTs

A new generative AI on the block

It was a stunning revelation to see that text-2-image generators had come of age and were able to create weird and wonderful images that were nothing like those in their training sets, indeed which were nothing like reality at all. With literally my first three text prompts with the amazing Colab notebook here (by the equally amazing Ryan Murdock: @advadnoun), I produced these images:

“A cityscape in the style of Mondrian”

“Melbourne skyline in pastel colours”

“Musicians in purple silhouette”

It was 27th January 2021, and I was hooked – these images were some of the most interesting and valuable I’ve seen from a generative art system, and all that was required as input were some text prompts. I really believe this is the biggest thing to hit the field of Computational Creativity in decades. I’ve since gone on to write my own version of the approach, generate more than 100,000 images with it (+ 100s of videos), write research papers and put on an exhibition of “imagined architectures” at Etopia in Zaragoza, Spain.

The generative approach which makes these images employs two pre-trained neural models. One is the generator, usually a generative adversarial network (GAN), and is able to produce a range of images. The other provides analytical support, able to take a pair of image/text-prompt and give a score for how well the image reflects the prompt and vice-versa: how well the prompt captures the nature of the image. This match-up means that the second model can guide the search for inputs to the first model, so that it outputs images which match a given text prompt.

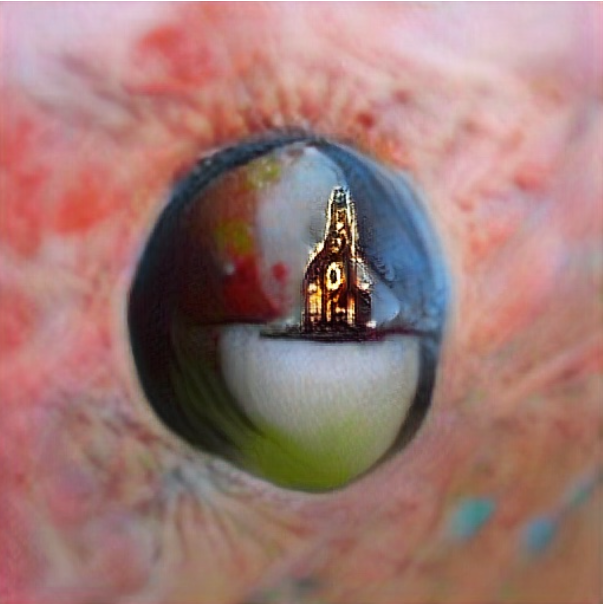

There has been an explosion of interest in combining generators and guidance models, but I’ve largely stayed loyal to the original pairing: BigGAN (from DeepMind) and CLIP (from OpenAI). I’ve put in a lot of effort to make the usage of the approach feel like a search engine which can return decent images for any text prompt. And, while I’ve experimenting from generating visualisations of everything from “Church in an eyeball” to “Crying dog” and “Beautiful vibrant flower” (see below), I’ve also concentrated on architecture more than anything else.

“Church in an eyeball”

“Crying dog”

“Beautiful vibrant flower”

Exquisite control

What I’ve been testing out is whether it is possible to exert exquisite control over BigGAN using CLIP. By that, I mean that I can reliably use the software in a controlled way, without having to resort to hoping for the best and opportunistically pouncing on any decent output (which happens a lot in generative art projects!) The reasons for wanting exquisite control stem from my interest in Computational Creativity. I want to hand over control of the CLIP+BigGAN process to a secondary AI system, but it takes a lot of general intelligence to be opportunistic, to see the best in bad output and to craft a project. If instead, the secondary system could use CLIP+BigGAN to supply images for given prompts reliably, then this opens up a whole world of higher-level computational creativity including the visualisation of automatically generated ideas, style invention, expressing the machine condition and lots more.

I limbered up with a couple of GAN-art projects to mint as NFTs on the Hic et Nunc platform:

These were fun to make, and they earned me a whopping zero tezos as NFTs! More importantly, they showed that it is possible to ask for quite specific things like vibrant flowers and miserable dogs and expect high-quality reliable yields. Moreover, I was able to play around with settings for the approach and by changing the learning rate, I could force the system to take either large jumps around the image search space, or tiny steps to fine tune an image according to quite specific requirements. I also was able to implement interpolation routines with fancy things like easing, and these looked good – although I had to come up with a way of avoiding the generation of dogs, as these are very common outputs from BigGAN (don’t ask…).

Slooooowly does it

It felt like I was ready to take on a substantial project, and come back to the architecture images which had so impressed me initially. I showed some of the images to Blanca Pérez Ferrer (@blancapferrer) at the Etopia Centre for Art and Technology, and she suggested that we could put together an online exhibition as part of their involvement in the Bauhaus 2 project. That really got the ball rolling. Blanca is the curator of the Etopia exhibition “VisionarIAs”, which celebrates the work of prestigious GAN-artists such as Mario Klingemann (@quasimondo), Sofia Crespo (@soficrespo91) and Helena Sarin (@neuralbricolage), so it was great to be working with Blanca.

I decided to produce something … slow. This was somewhat in response to the rise of NFTs and crypto art, where the aesthetic tends to be more vibrant, fast-moving and confrontational than other digital art forms. Of course, GAN-generated art can fit well in this genre, because it tends to produce pictures which are beautiful and eye-catching, but also arresting, sometimes grotesque and at other times dark and worrisome – perfect for a movement based around fast-moving social media and blockchain kudos. Trying to subvert all this led me to wanting to produce slow-paced video pieces capturing more traditionally beautiful scenes. Here are a couple of early time-lapse video pieces I managed to make:

So, you see the look of these pieces is quite different from the usual cryptoart aesthetics. And while there is a lot of classically beautiful GAN-art, it’s rare to see such contemplative pieces. I’ve always been fascinated by time-lapse videos, and their slow beauty felt like a perfect target for a generative art project. So, I came up with the phrase “GANlapse” for time-lapse videos where every frame is generated, and looked again at architectural drawings.

The technique

I decided to generate videos of GAN-generated architectural drawings embedding spectacular and inspiring buildings, but which have some realism because they change over the four seasons of a year. To make the still images for the Imagined Architectures exhibition, I used some quotes from well known architects as prompts for the AI system. For instance, I used the quote from Zaha Hadid: “Architecture is really about well-being”, and was surprised by the great images output from CLIP+BigGAN:

So, this was how I started in the production of the GANlapse videos. Instead this time of using the exact quotes from architects, I used simpler text prompts such as “Calm and beautiful modernist building” and “Serene architecture in woodland”, and I found the results were also really good. I tried about 20 different text prompts and produced 100 images for each. Importantly, I stored the latent vector input to BigGAN which produced each image. From the images produced, I cherry-picked around 50 to be the basis for the eventual GANlapse videos. Here’s a selection of those that I chose:

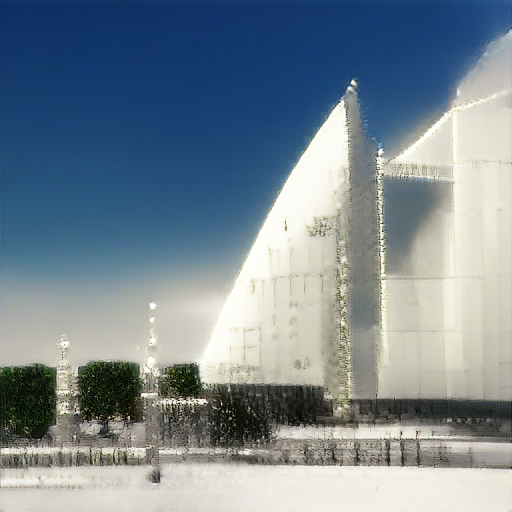

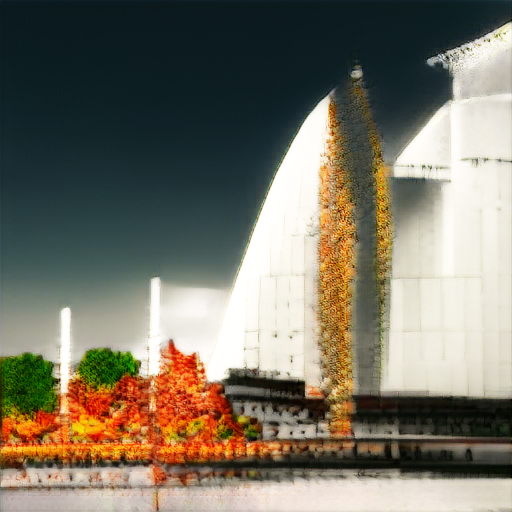

For each of the 50 images, (and there were many more that I could have chosen), I ran four more sessions with the CLIP-Guided BigGAN approach. This time, however, instead of the process initialising randomly, I got it to start with the latent vector that produced the original image, and I used a much smaller learning rate, so that only tiny steps away from the latent vector were made: this ensured that the images produced from the sessions would look a lot like the original, but would also reflect the text prompt. The four text prompts I used were variations on these: “Covered in snow”, “Spring flowers”, “Summer sun” and “Autumn leaves”, which would guide the generative process to produce variations on the original images. With a little prompt engineering, this worked really well. For instance, the results for the first image above were:

“Covered in snow”

“Spring flowers”

“Summer sun”

“Autumn leaves”

So, I finally had the art materials for the GANlapse pieces. The next stage of the technique involved producing a GAN interpolation between these four images, with 200 images calculated between each of the images. This is done by taking a weighted average of the entries in the latent vectors for each of the two images, and decreasing the weight of the first from 1 to 0 over the 200 waypoints. By taking the resultin images as the frames in a video, and using the amazing ffmpeg tool to sew them into an mp4, I produced the time lapse videos beautifully transitioning through the four seasons.

At some stage I realised that a lot of the pieces showed buildings that went off the side of the image, and that by simply reflecting over the y axis, I could produce landscape videos with the building mirrored – which is fine as many stunning buildings are symmetrical. As a final post-processing touch, I added a border around the GANlapse videos which feels like glass as it uses Gaussian blurring, as this seemed a modern way to make the pieces feel cherished in their own frame. The last thing to do was to provide names for each of the GANlapse videos, and I chose these to reflect an imagined usage for the beautiful buildings in their environment. For the one above, I chose “The Museum”, and here is the final GANlapse video for it:

In total, I produced 30 GANlapses for the Imagined Architectures exhibition at Etopia, from which the brilliant curator Blanca Pérez Ferrer (@blancapferrer) chose 13, along with lots of still images from those produced by inspiring quotes from female architects. Here are two more of my favourites from the GANlapse videos:

Please see the collection for the entire set of 30 GANlapse and 54 still images.

And please see “Arquitecturas Imaginadas” at Etopia for the curated exhibition of these works.

Here are couple of lovely four season GANlapses which aren’t included in the collection, as they are more abstract and less architectural in nature:

Is this all CC-ready?

In sturdy Computational Creativity (CC) style, I want to hand over various creative responsibilities in projects like this to a secondary AI system like The Painting Fool. It was clear that in certain contexts such as architectural drawings, it is possible to have fine-grained, reliable, control of the CLIP-guided BigGAN generation, which is a pre-requisite for AI control of this approach. However, I feel that there was still too much cherry picking, pivoting and opportunistic re-framing involved in the production of these GANlapse videos, requiring too much artistic input and general-level intelligence, to expect a secondary AI system to be able to control the generative process.

That said, I am enthused greatly by the prospects for CC with this approach. Indeed, my next project is going to be “Automatic Invention of Artistic Projects”, where I get The Painting Fool to choose subject matter (probably using trends on Twitter to spark this) and artistic styles which work well with the CLIP-Guided GAN generation process. I hoping to be surprised and inspired by what the AI system comes up with!

All images and videos are copyright Simon Colton